
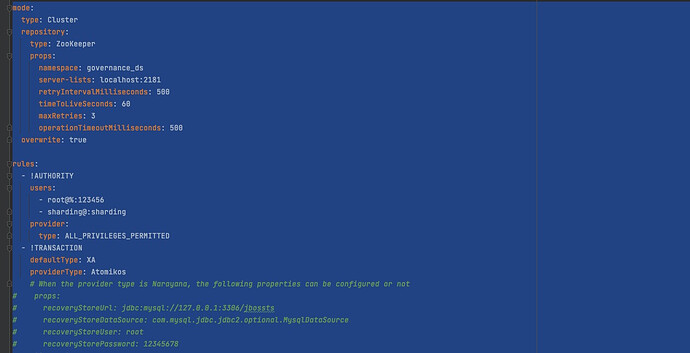

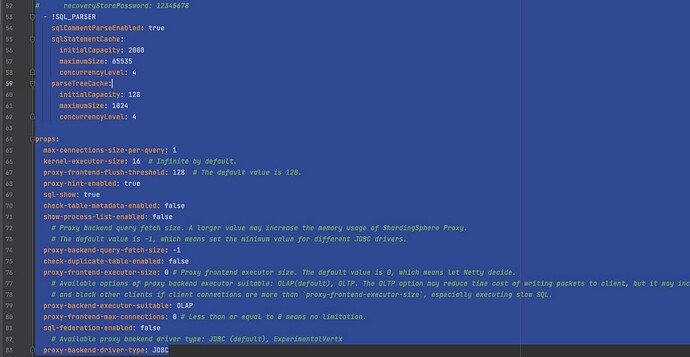

Environment:

Server version: 5.7.22-ShardingSphere-Proxy 5.1.2-SNAPSHOT-dirty-0f6fba9 Source distribution

Version 5.1.1 also fails, but with a different error type.

Scenario and problem:

I have two logical databases, sharding_db and sharding_db2.

sharding_db maps to the physical databases ds_1 and ds_2.

sharding_db2 also maps to the physical databases ds_1 and ds_2.

sharding_db and sharding_db2 are configured identically.

Two connections to the Proxy:

Connection 1: works as expected (the expected duplicate-key error is returned)

mysql> use sharding_db;

Database changed

mysql> begin;

Query OK, 0 rows affected (0.06 sec)

mysql> Insert into tbl_db_migrate_record(id, msg3, msg, record) values (146, '146', 'test', '[1,2,3]'),(139, '139', 'test', '[1,2,3]');

ERROR 1062 (23000): Duplicate entry '146' for key 'PRIMARY'

mysql> rollback;

Query OK, 0 rows affected (0.21 sec)

Connection 2: runtime exception

mysql> use sharding_db2;

Database changed

mysql> begin;

Query OK, 0 rows affected (0.01 sec)

mysql> Insert into tbl_db_migrate_record(id, msg3, msg, record) values (146, '146', 'test', '[1,2,3]'),(139, '139', 'test', '[1,2,3]');

ERROR 1997 (C1997): Runtime exception: [null]
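The two sessions above can also be replayed over JDBC, which makes the comparison scriptable. This is a minimal sketch with several assumptions. MySQL Connector/J must be on the classpath. The Proxy address `127.0.0.1:3307` and the `root`/`root` credentials are hypothetical placeholders. The class name `ProxyXaRepro` is also illustrative. For each logical database, it opens a separate connection and runs `USE`, `BEGIN`, the same INSERT and `ROLLBACK`. It then prints the error code and SQL state reported for the INSERT.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.SQLException;
import java.sql.Statement;

public class ProxyXaRepro {
    // Hypothetical connection settings: adjust to the actual Proxy deployment.
    static final String URL = "jdbc:mysql://127.0.0.1:3307/?useSSL=false";
    static final String USER = "root";
    static final String PASSWORD = "root";

    // Same INSERT as in the mysql sessions; id 146 already exists, so a duplicate-key error is expected.
    static final String INSERT_SQL =
            "INSERT INTO tbl_db_migrate_record(id, msg3, msg, record) VALUES "
            + "(146, '146', 'test', '[1,2,3]'), (139, '139', 'test', '[1,2,3]')";

    /** Runs USE, BEGIN, the INSERT and ROLLBACK on one connection; returns what the INSERT reported. */
    static String runSession(String logicDb) {
        try (Connection conn = DriverManager.getConnection(URL, USER, PASSWORD);
             Statement stmt = conn.createStatement()) {
            stmt.execute("USE " + logicDb);
            // Explicit BEGIN/ROLLBACK statements mirror the interactive mysql client session.
            stmt.execute("BEGIN");
            try {
                stmt.executeUpdate(INSERT_SQL);
                return "no error";
            } catch (SQLException e) {
                return "ERROR " + e.getErrorCode() + " (" + e.getSQLState() + "): " + e.getMessage();
            } finally {
                stmt.execute("ROLLBACK");
            }
        } catch (SQLException e) {
            // Covers a missing driver, an unreachable Proxy, or a failure in USE/BEGIN/ROLLBACK.
            return "failed: " + e.getMessage();
        }
    }

    public static void main(String[] args) {
        // One connection per logical database, run one after the other as in the report.
        for (String db : new String[] {"sharding_db", "sharding_db2"}) {
            System.out.println(db + " -> " + runSession(db));
        }
    }
}
```

If the issue reproduces, the `sharding_db` line should show error 1062 and the `sharding_db2` line should show error 1997, matching the sessions above.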

Current behavior:

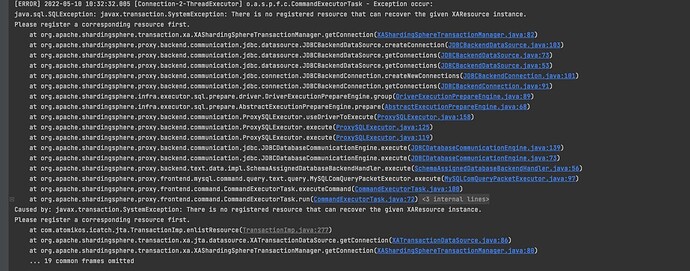

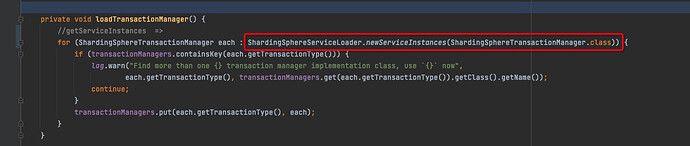

java.lang.NullPointerException: null

at org.apache.shardingsphere.transaction.xa.XAShardingSphereTransactionManager.getConnection(XAShardingSphereTransactionManager.java:80)

at org.apache.shardingsphere.proxy.backend.communication.jdbc.datasource.JDBCBackendDataSource.createConnection(JDBCBackendDataSource.java:114)

at org.apache.shardingsphere.proxy.backend.communication.jdbc.datasource.JDBCBackendDataSource.getConnections(JDBCBackendDataSource.java:81)

at org.apache.shardingsphere.proxy.backend.communication.jdbc.datasource.JDBCBackendDataSource.getConnections(JDBCBackendDataSource.java:55)

at org.apache.shardingsphere.proxy.backend.communication.jdbc.connection.JDBCBackendConnection.createNewConnections(JDBCBackendConnection.java:101)

at org.apache.shardingsphere.proxy.backend.communication.jdbc.connection.JDBCBackendConnection.getConnections(JDBCBackendConnection.java:91)

at org.apache.shardingsphere.infra.executor.sql.prepare.driver.DriverExecutionPrepareEngine.group(DriverExecutionPrepareEngine.java:88)

at org.apache.shardingsphere.infra.executor.sql.prepare.AbstractExecutionPrepareEngine.prepare(AbstractExecutionPrepareEngine.java:68)

at org.apache.shardingsphere.proxy.backend.communication.ProxySQLExecutor.useDriverToExecute(ProxySQLExecutor.java:158)

at org.apache.shardingsphere.proxy.backend.communication.ProxySQLExecutor.execute(ProxySQLExecutor.java:125)

at org.apache.shardingsphere.proxy.backend.communication.ProxySQLExecutor.execute(ProxySQLExecutor.java:119)

at org.apache.shardingsphere.proxy.backend.communication.jdbc.JDBCDatabaseCommunicationEngine.execute(JDBCDatabaseCommunicationEngine.java:144)

at org.apache.shardingsphere.proxy.backend.communication.jdbc.JDBCDatabaseCommunicationEngine.execute(JDBCDatabaseCommunicationEngine.java:74)

at org.apache.shardingsphere.proxy.backend.text.data.impl.SchemaAssignedDatabaseBackendHandler.execute(SchemaAssignedDatabaseBackendHandler.java:56)

at org.apache.shardingsphere.proxy.frontend.mysql.command.query.text.query.MySQLComQueryPacketExecutor.execute(MySQLComQueryPacketExecutor.java:97)

at org.apache.shardingsphere.proxy.frontend.command.CommandExecutorTask.executeCommand(CommandExecutorTask.java:107)

at org.apache.shardingsphere.proxy.frontend.command.CommandExecutorTask.run(CommandExecutorTask.java:77)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)