Two issues

- Data consistency check problem in version 5.1.0:

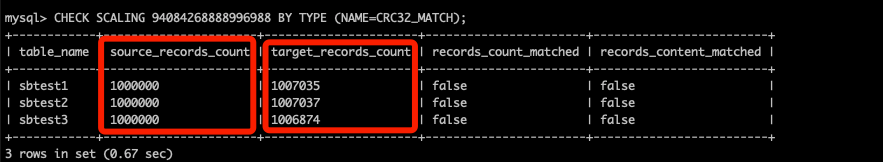

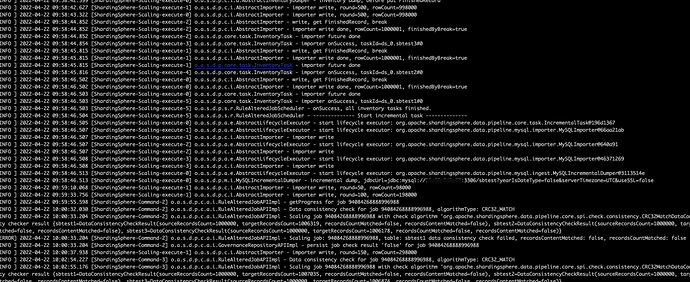

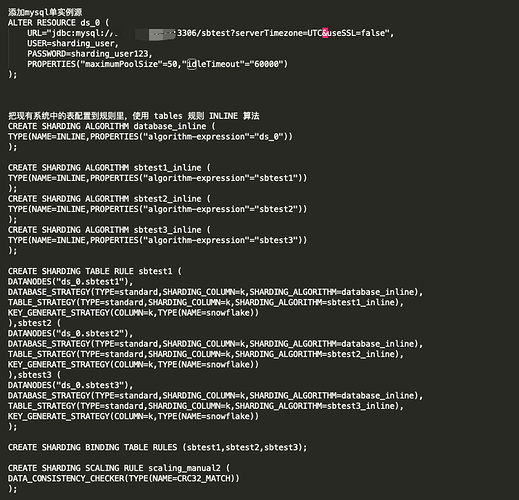

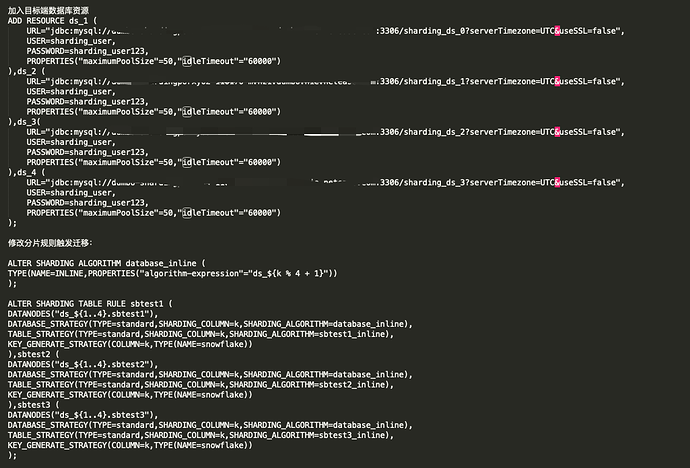

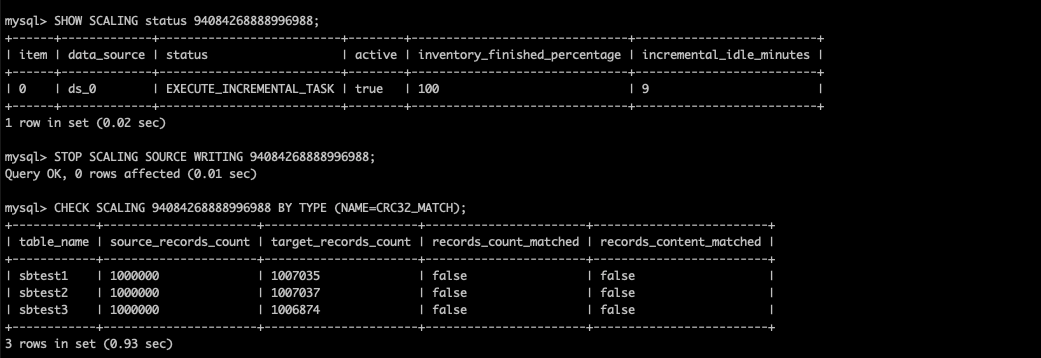

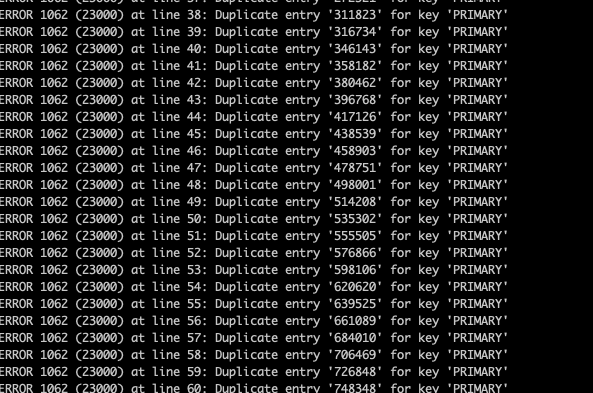

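Error log (currently running version 5.1.0). The upstream source is a single MySQL database. After the full import into the four-shard proxy, the consistency check passes, but once incremental synchronization is running, the check reports inconsistent data: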

[INFO ] 2022-04-22 11:57:03.206 [ShardingSphere-Scaling-execute-5] o.a.s.d.p.s.r.RuleAlteredJobScheduler - onSuccess, all inventory tasks finished.

[INFO ] 2022-04-22 11:57:03.206 [ShardingSphere-Scaling-execute-5] o.a.s.d.p.s.r.RuleAlteredJobScheduler - -------------- Start incremental task --------------

[INFO ] 2022-04-22 11:57:03.208 [ShardingSphere-Scaling-execute-0] o.a.s.d.p.a.e.AbstractLifecycleExecutor - start lifecycle executor: org.apache.shardingsphere.data.pipeline.core.task.IncrementalTask@42c9ad44

[INFO ] 2022-04-22 11:57:03.209 [ShardingSphere-Scaling-execute-1] o.a.s.d.p.a.e.AbstractLifecycleExecutor - start lifecycle executor: org.apache.shardingsphere.data.pipeline.mysql.importer.MySQLImporter@4b5cf8b1

[INFO ] 2022-04-22 11:57:03.209 [ShardingSphere-Scaling-execute-1] o.a.s.d.p.c.i.AbstractImporter - importer write

[INFO ] 2022-04-22 11:57:03.209 [ShardingSphere-Scaling-execute-2] o.a.s.d.p.a.e.AbstractLifecycleExecutor - start lifecycle executor: org.apache.shardingsphere.data.pipeline.mysql.importer.MySQLImporter@5d89eb42

[INFO ] 2022-04-22 11:57:03.209 [ShardingSphere-Scaling-execute-2] o.a.s.d.p.c.i.AbstractImporter - importer write

[INFO ] 2022-04-22 11:57:03.210 [ShardingSphere-Scaling-execute-3] o.a.s.d.p.a.e.AbstractLifecycleExecutor - start lifecycle executor: org.apache.shardingsphere.data.pipeline.mysql.importer.MySQLImporter@69d82600

[INFO ] 2022-04-22 11:57:03.210 [ShardingSphere-Scaling-execute-3] o.a.s.d.p.c.i.AbstractImporter - importer write

[INFO ] 2022-04-22 11:57:03.215 [ShardingSphere-Scaling-execute-0] o.a.s.d.p.a.e.AbstractLifecycleExecutor - start lifecycle executor: org.apache.shardingsphere.data.pipeline.mysql.ingest.MySQLIncrementalDumper@458ba40e

[INFO ] 2022-04-22 11:57:03.215 [ShardingSphere-Scaling-execute-0] o.a.s.d.p.m.i.MySQLIncrementalDumper - incremental dump, jdbcUrl=jdbc:mysql://10.90.249.77:3306/sbtest?yearIsDateType=false&serverTimezone=UTC&useSSL=false

[INFO ] 2022-04-22 11:57:52.604 [ShardingSphere-Command-1] o.a.s.d.p.c.a.i.RuleAlteredJobAPIImpl - getProgress for job 94091363582736229

[ERROR] 2022-04-22 11:58:00.003 [_finished_check_Worker-1] org.quartz.core.JobRunShell - Job DEFAULT._finished_check threw an unhandled Exception:

java.lang.NullPointerException: null

[ERROR] 2022-04-22 11:58:00.003 [_finished_check_Worker-1] org.quartz.core.ErrorLogger - Job (DEFAULT._finished_check threw an exception.

org.quartz.SchedulerException: Job threw an unhandled exception.

at org.quartz.core.JobRunShell.run(JobRunShell.java:213)

at org.quartz.simpl.SimpleThreadPool$WorkerThread.run(SimpleThreadPool.java:573)

Caused by: java.lang.NullPointerException: null

[ERROR] 2022-04-22 11:59:00.003 [_finished_check_Worker-1] org.quartz.core.JobRunShell - Job DEFAULT._finished_check threw an unhandled Exception:

java.lang.NullPointerException: null

[ERROR] 2022-04-22 11:59:00.003 [_finished_check_Worker-1] org.quartz.core.ErrorLogger - Job (DEFAULT._finished_check threw an exception.

org.quartz.SchedulerException: Job threw an unhandled exception.

at org.quartz.core.JobRunShell.run(JobRunShell.java:213)

at org.quartz.simpl.SimpleThreadPool$WorkerThread.run(SimpleThreadPool.java:573)

Caused by: java.lang.NullPointerException: null

[INFO ] 2022-04-22 11:59:04.155 [ShardingSphere-Command-2] o.a.s.d.p.c.a.i.RuleAlteredJobAPIImpl - Data consistency check for job 94091363582736229, algorithmType: CRC32_MATCH

[INFO ] 2022-04-22 11:59:10.938 [ShardingSphere-Command-2] o.a.s.d.p.c.a.i.RuleAlteredJobAPIImpl - Scaling job 94091363582736229 with check algorithm ‘org.apache.shardingsphere.data.pipeline.core.spi.check.consistency.CRC32MatchDataConsistencyCheckAlgorithm’ data consistency checker result {sbtest1=DataConsistencyCheckResult(sourceRecordsCount=1000000, targetRecordsCount=1000000, recordsCountMatched=true, recordsContentMatched=true), sbtest2=DataConsistencyCheckResult(sourceRecordsCount=1000000, targetRecordsCount=1000000, recordsCountMatched=true, recordsContentMatched=true), sbtest3=DataConsistencyCheckResult(sourceRecordsCount=1000000, targetRecordsCount=1000000, recordsCountMatched=true, recordsContentMatched=true)}

[INFO ] 2022-04-22 11:59:10.938 [ShardingSphere-Command-2] o.a.s.d.p.c.a.i.GovernanceRepositoryAPIImpl - persist job check result ‘true’ for job 94091363582736229

[ERROR] 2022-04-22 12:00:00.003 [_finished_check_Worker-1] org.quartz.core.JobRunShell - Job DEFAULT._finished_check threw an unhandled Exception:

java.lang.NullPointerException: null

[ERROR] 2022-04-22 12:00:00.003 [_finished_check_Worker-1] org.quartz.core.ErrorLogger - Job (DEFAULT._finished_check threw an exception.

org.quartz.SchedulerException: Job threw an unhandled exception.

at org.quartz.core.JobRunShell.run(JobRunShell.java:213)

at org.quartz.simpl.SimpleThreadPool$WorkerThread.run(SimpleThreadPool.java:573)

Caused by: java.lang.NullPointerException: null

[INFO ] 2022-04-22 12:00:14.492 [ShardingSphere-Command-3] o.a.s.d.p.c.a.i.RuleAlteredJobAPIImpl - getProgress for job 94091363582736229

[INFO ] 2022-04-22 12:00:15.978 [ShardingSphere-Command-3] o.a.s.d.p.c.a.i.RuleAlteredJobAPIImpl - getProgress for job 94091363582736229

[INFO ] 2022-04-22 12:00:22.598 [ShardingSphere-Command-3] o.a.s.d.p.c.a.i.RuleAlteredJobAPIImpl - Data consistency check for job 94091363582736229, algorithmType: CRC32_MATCH

[INFO ] 2022-04-22 12:00:23.415 [ShardingSphere-Command-3] o.a.s.d.p.c.a.i.RuleAlteredJobAPIImpl - Scaling job 94091363582736229 with check algorithm ‘org.apache.shardingsphere.data.pipeline.core.spi.check.consistency.CRC32MatchDataConsistencyCheckAlgorithm’ data consistency checker result {sbtest1=DataConsistencyCheckResult(sourceRecordsCount=1000538, targetRecordsCount=1001204, recordsCountMatched=false, recordsContentMatched=false), sbtest2=DataConsistencyCheckResult(sourceRecordsCount=1000550, targetRecordsCount=1001276, recordsCountMatched=false, recordsContentMatched=false), sbtest3=DataConsistencyCheckResult(sourceRecordsCount=1000548, targetRecordsCount=1001261, recordsCountMatched=false, recordsContentMatched=false)}

[ERROR] 2022-04-22 12:00:23.416 [ShardingSphere-Command-3] o.a.s.d.p.c.a.i.RuleAlteredJobAPIImpl - Scaling job: 94091363582736229, table: sbtest1 data consistency check failed, recordsContentMatched: false, recordsCountMatched: false

[INFO ] 2022-04-22 12:00:23.416 [ShardingSphere-Command-3] o.a.s.d.p.c.a.i.GovernanceRepositoryAPIImpl - persist job check result ‘false’ for job 94091363582736229

[INFO ] 2022-04-22 12:00:34.894 [ShardingSphere-Command-3] o.a.s.d.p.c.a.i.RuleAlteredJobAPIImpl - Data consistency check for job 94091363582736229, algorithmType: CRC32_MATCH

[INFO ] 2022-04-22 12:00:36.073 [ShardingSphere-Command-3] o.a.s.d.p.c.a.i.RuleAlteredJobAPIImpl - Scaling job 94091363582736229 with check algorithm ‘org.apache.shardingsphere.data.pipeline.core.spi.check.consistency.CRC32MatchDataConsistencyCheckAlgorithm’ data consistency checker result {sbtest1=DataConsistencyCheckResult(sourceRecordsCount=1000772, targetRecordsCount=1001885, recordsCountMatched=false, recordsContentMatched=false), sbtest2=DataConsistencyCheckResult(sourceRecordsCount=1000794, targetRecordsCount=1001960, recordsCountMatched=false, recordsContentMatched=false), sbtest3=DataConsistencyCheckResult(sourceRecordsCount=1000802, targetRecordsCount=1001978, recordsCountMatched=false, recordsContentMatched=false)}

[ERROR] 2022-04-22 12:00:36.073 [ShardingSphere-Command-3] o.a.s.d.p.c.a.i.RuleAlteredJobAPIImpl - Scaling job: 94091363582736229, table: sbtest1 data consistency check failed, recordsContentMatched: false, recordsCountMatched: false

[INFO ] 2022-04-22 12:00:36.073 [ShardingSphere-Command-3] o.a.s.d.p.c.a.i.GovernanceRepositoryAPIImpl - persist job check result ‘false’ for job 94091363582736229

[INFO ] 2022-04-22 12:00:52.928 [ShardingSphere-Command-3] o.a.s.d.p.c.a.i.RuleAlteredJobAPIImpl - Data consistency check for job 94091363582736229, algorithmType: CRC32_MATCH

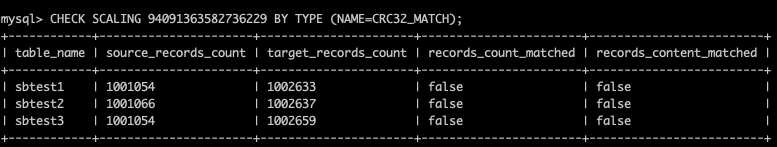

[INFO ] 2022-04-22 12:00:53.660 [ShardingSphere-Command-3] o.a.s.d.p.c.a.i.RuleAlteredJobAPIImpl - Scaling job 94091363582736229 with check algorithm ‘org.apache.shardingsphere.data.pipeline.core.spi.check.consistency.CRC32MatchDataConsistencyCheckAlgorithm’ data consistency checker result {sbtest1=DataConsistencyCheckResult(sourceRecordsCount=1001054, targetRecordsCount=1002633, recordsCountMatched=false, recordsContentMatched=false), sbtest2=DataConsistencyCheckResult(sourceRecordsCount=1001066, targetRecordsCount=1002637, recordsCountMatched=false, recordsContentMatched=false), sbtest3=DataConsistencyCheckResult(sourceRecordsCount=1001054, targetRecordsCount=1002659, recordsCountMatched=false, recordsContentMatched=false)}

[ERROR] 2022-04-22 12:00:53.660 [ShardingSphere-Command-3] o.a.s.d.p.c.a.i.RuleAlteredJobAPIImpl - Scaling job: 94091363582736229, table: sbtest1 data consistency check failed, recordsContentMatched: false, recordsCountMatched: false

[INFO ] 2022-04-22 12:00:53.660 [ShardingSphere-Command-3] o.a.s.d.p.c.a.i.GovernanceRepositoryAPIImpl - persist job check result ‘false’ for job 94091363582736229

[INFO ] 2022-04-22 12:00:56.303 [ShardingSphere-Command-3] o.a.s.d.p.c.a.i.RuleAlteredJobAPIImpl - Data consistency check for job 94091363582736229, algorithmType: CRC32_MATCH

[INFO ] 2022-04-22 12:00:56.978 [ShardingSphere-Command-3] o.a.s.d.p.c.a.i.RuleAlteredJobAPIImpl - Scaling job 94091363582736229 with check algorithm ‘org.apache.shardingsphere.data.pipeline.core.spi.check.consistency.CRC32MatchDataConsistencyCheckAlgorithm’ data consistency checker result {sbtest1=DataConsistencyCheckResult(sourceRecordsCount=1001054, targetRecordsCount=1002633, recordsCountMatched=false, recordsContentMatched=false), sbtest2=DataConsistencyCheckResult(sourceRecordsCount=1001066, targetRecordsCount=1002637, recordsCountMatched=false, recordsContentMatched=false), sbtest3=DataConsistencyCheckResult(sourceRecordsCount=1001054, targetRecordsCount=1002659, recordsCountMatched=false, recordsContentMatched=false)}

[ERROR] 2022-04-22 12:00:56.978 [ShardingSphere-Command-3] o.a.s.d.p.c.a.i.RuleAlteredJobAPIImpl - Scaling job: 94091363582736229, table: sbtest1 data consistency check failed, recordsContentMatched: false, recordsCountMatched: false

[INFO ] 2022-04-22 12:00:56.979 [ShardingSphere-Command-3] o.a.s.d.p.c.a.i.GovernanceRepositoryAPIImpl - persist job check result ‘false’ for job 94091363582736229

[ERROR] 2022-04-22 12:01:00.003 [_finished_check_Worker-1] org.quartz.core.JobRunShell - Job DEFAULT._finished_check threw an unhandled Exception:

java.lang.NullPointerException: null

[ERROR] 2022-04-22 12:01:00.003 [_finished_check_Worker-1] org.quartz.core.ErrorLogger - Job (DEFAULT._finished_check threw an exception.

org.quartz.SchedulerException: Job threw an unhandled exception.

at org.quartz.core.JobRunShell.run(JobRunShell.java:213)

at org.quartz.simpl.SimpleThreadPool$WorkerThread.run(SimpleThreadPool.java:573)

Caused by: java.lang.NullPointerException: null

[INFO ] 2022-04-22 12:01:26.551 [ShardingSphere-Command-3] o.a.s.d.p.c.a.i.RuleAlteredJobAPIImpl - Data consistency check for job 94091363582736229, algorithmType: CRC32_MATCH

[INFO ] 2022-04-22 12:01:27.551 [ShardingSphere-Command-3] o.a.s.d.p.c.a.i.RuleAlteredJobAPIImpl - Scaling job 94091363582736229 with check algorithm ‘org.apache.shardingsphere.data.pipeline.core.spi.check.consistency.CRC32MatchDataConsistencyCheckAlgorithm’ data consistency checker result {sbtest1=DataConsistencyCheckResult(sourceRecordsCount=1001054, targetRecordsCount=1002633, recordsCountMatched=false, recordsContentMatched=false), sbtest2=DataConsistencyCheckResult(sourceRecordsCount=1001066, targetRecordsCount=1002637, recordsCountMatched=false, recordsContentMatched=false), sbtest3=DataConsistencyCheckResult(sourceRecordsCount=1001054, targetRecordsCount=1002659, recordsCountMatched=false, recordsContentMatched=false)}

[ERROR] 2022-04-22 12:01:27.551 [ShardingSphere-Command-3] o.a.s.d.p.c.a.i.RuleAlteredJobAPIImpl - Scaling job: 94091363582736229, table: sbtest1 data consistency check failed, recordsContentMatched: false, recordsCountMatched: false

[INFO ] 2022-04-22 12:01:27.551 [ShardingSphere-Command-3] o.a.s.d.p.c.a.i.GovernanceRepositoryAPIImpl - persist job check result ‘false’ for job 94091363582736229

[ERROR] 2022-04-22 12:02:00.003 [_finished_check_Worker-1] org.quartz.core.JobRunShell - Job DEFAULT._finished_check threw an unhandled Exception:

java.lang.NullPointerException: null

[ERROR] 2022-04-22 12:02:00.003 [_finished_check_Worker-1] org.quartz.core.ErrorLogger - Job (DEFAULT._finished_check threw an exception.

org.quartz.SchedulerException: Job threw an unhandled exception.

at org.quartz.core.JobRunShell.run(JobRunShell.java:213)

at org.quartz.simpl.SimpleThreadPool$WorkerThread.run(SimpleThreadPool.java:573)

Caused by: java.lang.NullPointerException: null
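The check results logged above are easier to compare once the per-table counts are extracted. The following is a minimal sketch, assuming the `DataConsistencyCheckResult(...)` text format shown in the log; the function names `parse_check_results` and `count_surplus` are made up for this illustration and are not part of ShardingSphere.

```python
import re

# Matches one per-table entry inside a "data consistency checker result {...}" log line.
RESULT_RE = re.compile(
    r"(\w+)=DataConsistencyCheckResult\("
    r"sourceRecordsCount=(\d+), targetRecordsCount=(\d+), "
    r"recordsCountMatched=(true|false), recordsContentMatched=(true|false)\)"
)


def parse_check_results(log_line):
    """Return {table: (source_count, target_count)} for one checker-result line."""
    return {
        table: (int(source), int(target))
        for table, source, target, _count_ok, _content_ok in RESULT_RE.findall(log_line)
    }


def count_surplus(results):
    """Return {table: target_count - source_count}; positive means extra rows on the target."""
    return {table: target - source for table, (source, target) in results.items()}


# Shortened copy of the 12:00:23.415 result line from the log above.
sample = (
    "data consistency checker result {"
    "sbtest1=DataConsistencyCheckResult(sourceRecordsCount=1000538, targetRecordsCount=1001204, "
    "recordsCountMatched=false, recordsContentMatched=false), "
    "sbtest2=DataConsistencyCheckResult(sourceRecordsCount=1000550, targetRecordsCount=1001276, "
    "recordsCountMatched=false, recordsContentMatched=false), "
    "sbtest3=DataConsistencyCheckResult(sourceRecordsCount=1000548, targetRecordsCount=1001261, "
    "recordsCountMatched=false, recordsContentMatched=false)}"
)

surplus = count_surplus(parse_check_results(sample))
print(surplus)
```

For this line, every table has several hundred more rows on the target than on the source, so the mismatch is not simply the target lagging behind the source.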

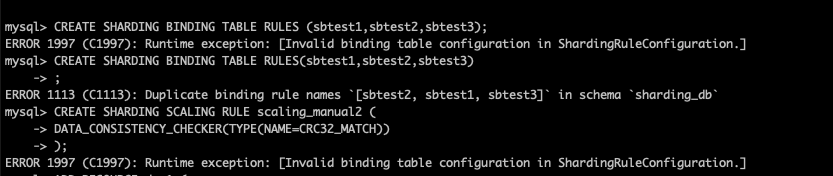

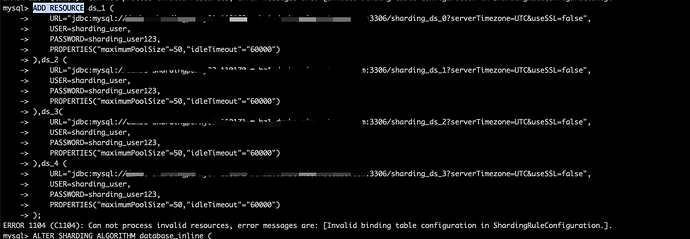

- In version 5.1.1, creating the binding table rule fails with an error (no error occurred in 5.1.0)

Error log:

[ERROR] 2022-04-22 12:23:17.893 [ShardingSphere-Command-0] o.a.s.p.f.c.CommandExecutorTask - Exception occur:

java.lang.IllegalArgumentException: Invalid binding table configuration in ShardingRuleConfiguration.

at com.google.common.base.Preconditions.checkArgument(Preconditions.java:142)

at org.apache.shardingsphere.sharding.rule.ShardingRule.<init>(ShardingRule.java:125)

at org.apache.shardingsphere.sharding.rule.builder.ShardingRuleBuilder.build(ShardingRuleBuilder.java:41)

at org.apache.shardingsphere.sharding.rule.builder.ShardingRuleBuilder.build(ShardingRuleBuilder.java:35)

at org.apache.shardingsphere.infra.rule.builder.schema.SchemaRulesBuilder.buildRules(SchemaRulesBuilder.java:63)

at org.apache.shardingsphere.mode.metadata.MetaDataContextsBuilder.getSchemaRules(MetaDataContextsBuilder.java:105)

at org.apache.shardingsphere.mode.metadata.MetaDataContextsBuilder.addSchema(MetaDataContextsBuilder.java:83)

at org.apache.shardingsphere.mode.manager.ContextManager.buildChangedMetaDataContext(ContextManager.java:495)

at org.apache.shardingsphere.mode.manager.ContextManager.alterRuleConfiguration(ContextManager.java:267)

at org.apache.shardingsphere.proxy.backend.text.distsql.rdl.rule.RuleDefinitionBackendHandler.processSQLStatement(RuleDefinitionBackendHandler.java:121)

at org.apache.shardingsphere.proxy.backend.text.distsql.rdl.rule.RuleDefinitionBackendHandler.execute(RuleDefinitionBackendHandler.java:95)

at org.apache.shardingsphere.proxy.backend.text.distsql.rdl.rule.RuleDefinitionBackendHandler.execute(RuleDefinitionBackendHandler.java:60)

at org.apache.shardingsphere.proxy.backend.text.SchemaRequiredBackendHandler.execute(SchemaRequiredBackendHandler.java:51)

at org.apache.shardingsphere.proxy.frontend.mysql.command.query.text.query.MySQLComQueryPacketExecutor.execute(MySQLComQueryPacketExecutor.java:97)

at org.apache.shardingsphere.proxy.frontend.command.CommandExecutorTask.executeCommand(CommandExecutorTask.java:100)

at org.apache.shardingsphere.proxy.frontend.command.CommandExecutorTask.run(CommandExecutorTask.java:72)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:750)

[ERROR] 2022-04-22 12:25:20.077 [ShardingSphere-Command-1] o.a.s.p.f.c.CommandExecutorTask - Exception occur:

org.apache.shardingsphere.infra.distsql.exception.rule.DuplicateRuleException: Duplicate binding rule names [sbtest2, sbtest1, sbtest3] in schema sharding_db

at org.apache.shardingsphere.sharding.distsql.handler.update.CreateShardingBindingTableRuleStatementUpdater.checkToBeCreatedDuplicateBindingTables(CreateShardingBindingTableRuleStatementUpdater.java:77)

at org.apache.shardingsphere.sharding.distsql.handler.update.CreateShardingBindingTableRuleStatementUpdater.checkSQLStatement(CreateShardingBindingTableRuleStatementUpdater.java:48)

at org.apache.shardingsphere.sharding.distsql.handler.update.CreateShardingBindingTableRuleStatementUpdater.checkSQLStatement(CreateShardingBindingTableRuleStatementUpdater.java:40)

at org.apache.shardingsphere.proxy.backend.text.distsql.rdl.rule.RuleDefinitionBackendHandler.execute(RuleDefinitionBackendHandler.java:82)

at org.apache.shardingsphere.proxy.backend.text.distsql.rdl.rule.RuleDefinitionBackendHandler.execute(RuleDefinitionBackendHandler.java:60)

at org.apache.shardingsphere.proxy.backend.text.SchemaRequiredBackendHandler.execute(SchemaRequiredBackendHandler.java:51)

at org.apache.shardingsphere.proxy.frontend.mysql.command.query.text.query.MySQLComQueryPacketExecutor.execute(MySQLComQueryPacketExecutor.java:97)

at org.apache.shardingsphere.proxy.frontend.command.CommandExecutorTask.executeCommand(CommandExecutorTask.java:100)

at org.apache.shardingsphere.proxy.frontend.command.CommandExecutorTask.run(CommandExecutorTask.java:72)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:750)

[ERROR] 2022-04-22 12:25:44.031 [ShardingSphere-Command-1] o.a.s.p.f.c.CommandExecutorTask - Exception occur
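To see the order of the failures without reading through the stack traces, the first exception of each `[ERROR]` entry can be pulled out of the log. This is a minimal sketch, assuming the log layout shown above (a `[LEVEL] date time [thread] logger - message` header, then the exception line, with blank lines in between); `first_exception_per_error` is a hypothetical helper name.

```python
import re

# Header of a log entry as it appears above: "[LEVEL] date time [thread] logger - message".
ENTRY_RE = re.compile(r"^\[(\w+)\s*\] (\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d{3}) ")


def first_exception_per_error(lines):
    """Pair each [ERROR] entry's timestamp with the exception class on the next non-blank line."""
    found = []
    pending = None  # timestamp of an [ERROR] entry still waiting for its exception line
    for raw in lines:
        line = raw.strip()
        if not line:
            continue
        match = ENTRY_RE.match(line)
        if match:
            # A new entry starts; only ERROR entries are tracked.
            pending = match.group(2) if match.group(1) == "ERROR" else None
        elif pending is not None:
            if not line.startswith("at "):
                # Keep only the class name in front of the first colon.
                found.append((pending, line.split(":", 1)[0]))
            pending = None
    return found


# Excerpt copied from the log above.
sample = """
[ERROR] 2022-04-22 12:23:17.893 [ShardingSphere-Command-0] o.a.s.p.f.c.CommandExecutorTask - Exception occur:

java.lang.IllegalArgumentException: Invalid binding table configuration in ShardingRuleConfiguration.

at com.google.common.base.Preconditions.checkArgument(Preconditions.java:142)

[ERROR] 2022-04-22 12:25:20.077 [ShardingSphere-Command-1] o.a.s.p.f.c.CommandExecutorTask - Exception occur:

org.apache.shardingsphere.infra.distsql.exception.rule.DuplicateRuleException: Duplicate binding rule names [sbtest2, sbtest1, sbtest3] in schema sharding_db
""".splitlines()

errors = first_exception_per_error(sample)
for timestamp, exception in errors:
    print(timestamp, exception)
```

On this excerpt the output lists the `IllegalArgumentException` at 12:23:17 first and the `DuplicateRuleException` at 12:25:20 second, which matches the order in which they appear in the log.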