Verifying ShardingSphere-Proxy BASE Transactions with Seata

About Seata BASE transactions

See: Seata BASE Transaction :: ShardingSphere

Building the services

Service plan

| Service | IP | Notes |
|---|---|---|
| Sysbench | 127.0.0.1 | Version: 1.0.20 |
| MySQL | 127.0.0.1 | Version: 5.7.26 |
| ShardingSphere-Proxy | 127.0.0.1 | apache/shardingsphere, built from master (5.0.1-SNAPSHOT) |
| Seata | 127.0.0.1 | Version: 1.4.2 |

ShardingSphere-Proxy build and configuration

Obtaining the Proxy

See:

How to Set Up Your DEV Environment

conf files

server.yaml

######################################################################################################
#
# If you want to configure governance, authorization and proxy properties, please refer to this file.
#
######################################################################################################

# mode:
#   type: Cluster
#   repository:
#     type: Zookeeper
#     props:
#       namespace: governance_ds
#       server-lists: localhost:2181
#       retryIntervalMilliseconds: 500
#       timeToLiveSeconds: 60
#       maxRetries: 3
#       operationTimeoutMilliseconds: 500
#   overwrite: true

rules:
  - !AUTHORITY
    users:
      - root@%:root
      - sharding@:sharding
    provider:
      type: NATIVE
#scaling:
#  blockQueueSize: 10000
#  workerThread: 40
  - !TRANSACTION
    defaultType: BASE
    providerType: Seata

props:
  max-connections-size-per-query: 1
  sql-show: true
#  executor-size: 16  # Infinite by default.
#  proxy-frontend-flush-threshold: 128  # The default value is 128.
#    # LOCAL: Proxy will run with LOCAL transaction.
#    # XA: Proxy will run with XA transaction.
#    # BASE: Proxy will run with B.A.S.E transaction.
#  proxy-transaction-type: LOCAL
#  xa-transaction-manager-type: Atomikos
#  proxy-opentracing-enabled: false
#  proxy-hint-enabled: false
#  sql-show: false
#  check-table-metadata-enabled: false
#  lock-wait-timeout-milliseconds: 50000 # The maximum time to wait for a lock
#    # Proxy backend query fetch size. A larger value may increase the memory usage of ShardingSphere Proxy.
#    # The default value is -1, which means set the minimum value for different JDBC drivers.
#  proxy-backend-query-fetch-size: -1
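Each entry under `users` packs three fields into one string, `<username>@<hostname>:<password>`. The sketch below splits such an entry; it assumes, as we read the ShardingSphere 5.x authority documentation, that an empty hostname (as in `sharding@:sharding`) means "any host", the same as `%`. `ProxyUser` and `parse_user` are hypothetical helpers, not ShardingSphere APIs. Note that the sysbench run later logs in as `root`/`root`, which matches the `root@%:root` entry.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProxyUser:
    username: str
    hostname: str
    password: str


def parse_user(entry: str) -> ProxyUser:
    """Split '<username>@<hostname>:<password>' into its three parts.

    Assumption: an empty hostname is normalised to '%' (any host).
    """
    user_host, sep, password = entry.partition(":")  # password is everything after the first ':'
    if not sep:
        raise ValueError(f"missing ':' in user entry {entry!r}")
    username, sep, hostname = user_host.partition("@")
    if not sep or not username:
        raise ValueError(f"expected '<username>@<hostname>' in {entry!r}")
    return ProxyUser(username, hostname or "%", password)


for entry in ["root@%:root", "sharding@:sharding"]:
    print(parse_user(entry))
```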

config-sharding.yaml

schemaName: sbtest_sharding

dataSources:
  ds_0:
    url: jdbc:mysql://127.0.0.1:3306/sbtest_sharding?useSSL=false&useServerPrepStmts=true&cachePrepStmts=true&prepStmtCacheSize=8192&prepStmtCacheSqlLimit=1024
    username: root
    password: passwd
    connectionTimeoutMilliseconds: 30000
    idleTimeoutMilliseconds: 60000
    maxLifetimeMilliseconds: 1800000
    maxPoolSize: 3000
    minPoolSize: 1
  ds_1:
    url: jdbc:mysql://127.0.0.2:3306/sbtest_sharding?useSSL=false&useServerPrepStmts=true&cachePrepStmts=true&prepStmtCacheSize=8192&prepStmtCacheSqlLimit=1024
    username: root
    password: passwd
    connectionTimeoutMilliseconds: 30000
    idleTimeoutMilliseconds: 60000
    maxLifetimeMilliseconds: 1800000
    maxPoolSize: 3000
    minPoolSize: 1

rules:
  - !SHARDING
    tables:
      sbtest1:
        actualDataNodes: ds_${0..1}.sbtest1_${0..9}
        tableStrategy:
          standard:
            shardingColumn: id
            shardingAlgorithmName: table_inline_1
        keyGenerateStrategy:
          column: id
          keyGeneratorName: snowflake
      sbtest2:
        actualDataNodes: ds_${0..1}.sbtest2_${0..9}
        tableStrategy:
          standard:
            shardingColumn: id
            shardingAlgorithmName: table_inline_2
        keyGenerateStrategy:
          column: id
          keyGeneratorName: snowflake
      sbtest3:
        actualDataNodes: ds_${0..1}.sbtest3_${0..9}
        tableStrategy:
          standard:
            shardingColumn: id
            shardingAlgorithmName: table_inline_3
        keyGenerateStrategy:
          column: id
          keyGeneratorName: snowflake
      sbtest4:
        actualDataNodes: ds_${0..1}.sbtest4_${0..9}
        tableStrategy:
          standard:
            shardingColumn: id
            shardingAlgorithmName: table_inline_4
        keyGenerateStrategy:
          column: id
          keyGeneratorName: snowflake
      sbtest5:
        actualDataNodes: ds_${0..1}.sbtest5_${0..9}
        tableStrategy:
          standard:
            shardingColumn: id
            shardingAlgorithmName: table_inline_5
        keyGenerateStrategy:
          column: id
          keyGeneratorName: snowflake
      sbtest6:
        actualDataNodes: ds_${0..1}.sbtest6_${0..9}
        tableStrategy:
          standard:
            shardingColumn: id
            shardingAlgorithmName: table_inline_6
        keyGenerateStrategy:
          column: id
          keyGeneratorName: snowflake
      sbtest7:
        actualDataNodes: ds_${0..1}.sbtest7_${0..9}
        tableStrategy:
          standard:
            shardingColumn: id
            shardingAlgorithmName: table_inline_7
        keyGenerateStrategy:
          column: id
          keyGeneratorName: snowflake
      sbtest8:
        actualDataNodes: ds_${0..1}.sbtest8_${0..9}
        tableStrategy:
          standard:
            shardingColumn: id
            shardingAlgorithmName: table_inline_8
        keyGenerateStrategy:
          column: id
          keyGeneratorName: snowflake
      sbtest9:
        actualDataNodes: ds_${0..1}.sbtest9_${0..9}
        tableStrategy:
          standard:
            shardingColumn: id
            shardingAlgorithmName: table_inline_9
        keyGenerateStrategy:
          column: id
          keyGeneratorName: snowflake
      sbtest10:
        actualDataNodes: ds_${0..1}.sbtest10_${0..9}
        tableStrategy:
          standard:
            shardingColumn: id
            shardingAlgorithmName: table_inline_10
        keyGenerateStrategy:
          column: id
          keyGeneratorName: snowflake
    defaultDatabaseStrategy:
      standard:
        shardingColumn: id
        shardingAlgorithmName: database_inline
    shardingAlgorithms:
      database_inline:
        type: INLINE
        props:
          algorithm-expression: ds_${id & 1}
      table_inline_1:
        type: INLINE
        props:
          algorithm-expression: sbtest1_${id % 10}
      table_inline_2:
        type: INLINE
        props:
          algorithm-expression: sbtest2_${id % 10}
      table_inline_3:
        type: INLINE
        props:
          algorithm-expression: sbtest3_${id % 10}
      table_inline_4:
        type: INLINE
        props:
          algorithm-expression: sbtest4_${id % 10}
      table_inline_5:
        type: INLINE
        props:
          algorithm-expression: sbtest5_${id % 10}
      table_inline_6:
        type: INLINE
        props:
          algorithm-expression: sbtest6_${id % 10}
      table_inline_7:
        type: INLINE
        props:
          algorithm-expression: sbtest7_${id % 10}
      table_inline_8:
        type: INLINE
        props:
          algorithm-expression: sbtest8_${id % 10}
      table_inline_9:
        type: INLINE
        props:
          algorithm-expression: sbtest9_${id % 10}
      table_inline_10:
        type: INLINE
        props:
          algorithm-expression: sbtest10_${id % 10}
    keyGenerators:
      snowflake:
        type: SNOWFLAKE
        props:
          worker-id: 123
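To see where a row lands, the two INLINE expressions can be re-evaluated by hand: `ds_${id & 1}` picks the data source and `sbtestN_${id % 10}` picks the physical table. The sketch below is a plain Python re-implementation of those two expressions for non-negative ids (snowflake ids are positive); `route` is a hypothetical helper, not ShardingSphere code.

```python
def route(logical_table: str, row_id: int) -> str:
    """Return '<data source>.<physical table>' for a row of an sbtestN table.

    Re-implements the INLINE expressions from config-sharding.yaml:
      database_inline -> ds_${id & 1}
      table_inline_N  -> sbtestN_${id % 10}
    """
    if row_id < 0:
        # Remainder semantics for negative operands differ between languages; out of scope here.
        raise ValueError("this sketch only handles non-negative ids")
    data_source = f"ds_{row_id & 1}"                   # even id -> ds_0, odd id -> ds_1
    physical_table = f"{logical_table}_{row_id % 10}"  # last decimal digit picks the table
    return f"{data_source}.{physical_table}"


for row_id in (0, 7, 12, 25):
    print(row_id, "->", route("sbtest1", row_id))
# 0 -> ds_0.sbtest1_0
# 7 -> ds_1.sbtest1_7
# 12 -> ds_0.sbtest1_2
# 25 -> ds_1.sbtest1_5

# How many of the 20 declared nodes (ds_${0..1}.sbtest1_${0..9}) can ever receive rows?
reachable = sorted({route("sbtest1", i) for i in range(1000)})
print(len(reachable))  # 10
```

Because 10 is even, `id % 10` always has the same parity as `id`, so with these two expressions ds_0 only ever receives the `_0/_2/_4/_6/_8` tables and ds_1 only the odd-numbered ones; the other 10 declared data nodes per logical table stay empty.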

seata.conf

client {
    application.id = id
    transaction.service.group = my_test_tx_group
}

file.conf

## transaction log store, only used in seata-server
store {
  ## store mode: file, db, redis
  mode = "file"

  ## file store property
  file {
    ## store location dir
    dir = "sessionStore"
    # branch session size; if exceeded, first try to compress the lock key, then throw an exception if still exceeded
    maxBranchSessionSize = 16384
    # global session size; if exceeded, throw an exception
    maxGlobalSessionSize = 512
    # file buffer size; if exceeded, allocate a new buffer
    fileWriteBufferCacheSize = 16384
    # batch read size when recovering sessions
    sessionReloadReadSize = 100
    # async, sync
    flushDiskMode = async
  }

  ## database store property
  db {
    ## the implementation of javax.sql.DataSource, such as DruidDataSource(druid)/BasicDataSource(dbcp)/HikariDataSource(hikari) etc.
    datasource = "druid"
    ## mysql/oracle/postgresql/h2/oceanbase etc.
    dbType = "mysql"
    driverClassName = "com.mysql.jdbc.Driver"
    url = "jdbc:mysql://127.0.0.1:3306/seata"
    user = "root"
    password = "123456"
    minConn = 5
    maxConn = 100
    globalTable = "global_table"
    branchTable = "branch_table"
    lockTable = "lock_table"
    queryLimit = 100
    maxWait = 5000
  }

  ## redis store property
  redis {
    host = "127.0.0.1"
    port = "6379"
    password = ""
    database = "0"
    minConn = 1
    maxConn = 10
    maxTotal = 100
    queryLimit = 100
  }
}

transport {
  # tcp, udt, unix-domain-socket
  type = "TCP"
  # NIO, NATIVE
  server = "NIO"
  # enable heartbeat
  heartbeat = true
  # thread factory for netty
  thread-factory {
    boss-thread-prefix = "NettyBoss"
    worker-thread-prefix = "NettyServerNIOWorker"
    server-executor-thread-prefix = "NettyServerBizHandler"
    share-boss-worker = false
    client-selector-thread-prefix = "NettyClientSelector"
    client-selector-thread-size = 1
    client-worker-thread-prefix = "NettyClientWorkerThread"
    # netty boss thread size, not used for UDT
    boss-thread-size = 1
    # auto default pin or 8
    worker-thread-size = 8
  }
}

service {
  vgroupMapping.my_test_tx_group = "default"
  # only supported when registry.type=file; please don't set multiple addresses
  default.grouplist = "127.0.0.1:8091"
  # degrade, currently not supported
  enableDegrade = false
  # disable seata
  disableGlobalTransaction = false
}

client {
  async.commit.buffer.limit = 10000
  lock {
    retry.internal = 10
    retry.times = 30
  }
}

registry.conf

registry {
  # file, nacos, eureka, redis, zk, consul, etcd3, sofa
  type = "file"
  loadBalance = "RandomLoadBalance"
  loadBalanceVirtualNodes = 10

  nacos {
    application = "seata-server"
    serverAddr = "127.0.0.1:8848"
    group = "SEATA_GROUP"
    namespace = ""
    cluster = "default"
    username = ""
    password = ""
  }
  eureka {
    serviceUrl = "http://localhost:8761/eureka"
    application = "default"
    weight = "1"
  }
  redis {
    serverAddr = "localhost:6379"
    db = 0
    password = ""
    cluster = "default"
    timeout = 0
  }
  zk {
    cluster = "default"
    serverAddr = "127.0.0.1:2181"
    sessionTimeout = 6000
    connectTimeout = 2000
    username = ""
    password = ""
  }
  consul {
    cluster = "default"
    serverAddr = "127.0.0.1:8500"
  }
  etcd3 {
    cluster = "default"
    serverAddr = "http://localhost:2379"
  }
  sofa {
    serverAddr = "127.0.0.1:9603"
    application = "default"
    region = "DEFAULT_ZONE"
    datacenter = "DefaultDataCenter"
    cluster = "default"
    group = "SEATA_GROUP"
    addressWaitTime = "3000"
  }
  file {
    name = "file.conf"
  }
}

config {
  # file, nacos, apollo, zk, consul, etcd3
  type = "file"

  nacos {
    serverAddr = "127.0.0.1:8848"
    namespace = ""
    group = "SEATA_GROUP"
    username = ""
    password = ""
  }
  consul {
    serverAddr = "127.0.0.1:8500"
  }
  apollo {
    appId = "seata-server"
    apolloMeta = "http://192.168.1.204:8801"
    namespace = "application"
    apolloAccesskeySecret = ""
  }
  zk {
    serverAddr = "127.0.0.1:2181"
    sessionTimeout = 6000
    connectTimeout = 2000
    username = ""
    password = ""
  }
  etcd3 {
    serverAddr = "http://localhost:2379"
  }
  file {
    name = "file.conf"
  }
}
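The three files above form one lookup chain for the Seata client inside the Proxy: `seata.conf` names the transaction service group, `registry.conf` (with `type = "file"`) points at `file.conf`, and `file.conf` maps the group to a cluster and the cluster to the TC address. The sketch below walks that chain over flattened, hand-copied excerpts of the three files; it is not a HOCON parser, and `resolve_tc_address` is a hypothetical helper, not a Seata API. A group name in `seata.conf` that has no matching `vgroupMapping` key makes step 2 fail.

```python
# Flattened excerpts of the three files above (dotted keys, values as configured).
SEATA_CONF = {"client.transaction.service.group": "my_test_tx_group"}
REGISTRY_CONF = {"registry.type": "file", "registry.file.name": "file.conf"}
FILE_CONF = {
    "service.vgroupMapping.my_test_tx_group": "default",
    "service.default.grouplist": "127.0.0.1:8091",
}


def resolve_tc_address(seata_conf: dict, registry_conf: dict, file_conf: dict) -> str:
    """Follow seata.conf -> registry.conf -> file.conf to the TC address (file registry only)."""
    if registry_conf["registry.type"] != "file":
        raise NotImplementedError("this sketch only covers registry.type = file")
    # 1. seata.conf: which transaction service group does the client belong to?
    group = seata_conf["client.transaction.service.group"]
    # 2. file.conf: map the group to a TC cluster name (KeyError if there is no mapping)
    cluster = file_conf[f"service.vgroupMapping.{group}"]
    # 3. file.conf: with the file registry, the cluster's address list is static
    return file_conf[f"service.{cluster}.grouplist"]


print(resolve_tc_address(SEATA_CONF, REGISTRY_CONF, FILE_CONF))  # 127.0.0.1:8091
```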

lib files

#### Add the following JARs
wget https://repo1.maven.org/maven2/mysql/mysql-connector-java/8.0.26/mysql-connector-java-8.0.26.jar
wget https://repo1.maven.org/maven2/com/github/ben-manes/caffeine/caffeine/2.7.0/caffeine-2.7.0.jar
wget https://repo1.maven.org/maven2/io/seata/seata-all/1.4.2/seata-all-1.4.2.jar
wget https://repo1.maven.org/maven2/org/apache/shardingsphere/shardingsphere-infra-optimize/5.0.0/shardingsphere-infra-optimize-5.0.0.jar
wget https://repo1.maven.org/maven2/cglib/cglib/3.1/cglib-3.1.jar
wget https://repo1.maven.org/maven2/org/apache/commons/commons-pool2/2.9.0/commons-pool2-2.9.0.jar
wget https://repo1.maven.org/maven2/commons-pool/commons-pool/1.6/commons-pool-1.6.jar
wget https://repo1.maven.org/maven2/com/typesafe/config/1.2.1/config-1.2.1.jar

#### Remove the following JARs, otherwise they conflict
[root@localhost lib]# ll -thrl | grep xa
-rw-r--r--. 1 root root 44K Nov 27 12:36 shardingsphere-transaction-xa-core-5.0.1-SNAPSHOT.jar
-rw-r--r--. 1 root root 11K Nov 27 12:36 shardingsphere-transaction-xa-spi-5.0.1-SNAPSHOT.jar
-rw-r--r--. 1 root root 13K Nov 27 12:36 shardingsphere-transaction-xa-atomikos-5.0.1-SNAPSHOT.jar

### Obtain shardingsphere-transaction-base-seata-at-5.0.1-SNAPSHOT.jar by building from source
git clone https://github.com/apache/shardingsphere.git
cd shardingsphere
./mvnw -Dmaven.javadoc.skip=true -Djacoco.skip=true -DskipITs -DskipTests clean install -T1C -Prelease
ll ./shardingsphere-kernel/shardingsphere-transaction/shardingsphere-transaction-type/shardingsphere-transaction-base/shardingsphere-transaction-base-seata-at/target/shardingsphere-transaction-base-seata-at-5.0.1-SNAPSHOT.jar

### Or download the released 5.0.0 artifact
wget https://repo1.maven.org/maven2/org/apache/shardingsphere/shardingsphere-transaction-base-seata-at/5.0.0/shardingsphere-transaction-base-seata-at-5.0.0.jar

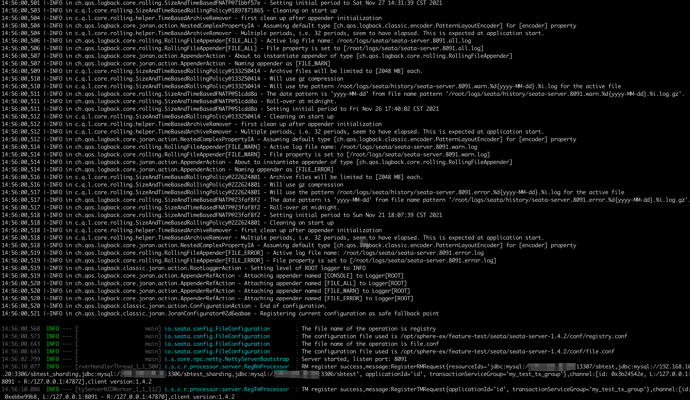
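Before starting the Proxy it can help to check the `lib` directory against the "add" and "remove" lists above. The sketch below is a hypothetical pre-flight check (`check_lib`, `check_lib_dir` and the prefix lists are our own, derived from the file names above); it matches by file-name prefix only and does not inspect JAR contents or versions.

```python
from pathlib import Path
from typing import Iterable, List, Tuple

# Hypothetical prefix lists derived from the "add" and "remove" steps above.
REQUIRED_PREFIXES = [
    "mysql-connector-java-",
    "caffeine-",
    "seata-all-",
    "shardingsphere-infra-optimize-",
    "cglib-",
    "commons-pool2-",
    "commons-pool-",
    "config-",
    "shardingsphere-transaction-base-seata-at-",
]
CONFLICTING_PREFIXES = ["shardingsphere-transaction-xa-"]


def check_lib(jar_names: Iterable[str]) -> Tuple[List[str], List[str]]:
    """Return (required prefixes with no matching JAR, conflicting JARs that are present)."""
    jars = [name for name in jar_names if name.endswith(".jar")]
    missing = [p for p in REQUIRED_PREFIXES if not any(j.startswith(p) for j in jars)]
    conflicting = sorted(j for j in jars if any(j.startswith(p) for p in CONFLICTING_PREFIXES))
    return missing, conflicting


def check_lib_dir(lib_dir: str = "lib") -> Tuple[List[str], List[str]]:
    """Run check_lib over the *.jar files found in a Proxy lib directory."""
    return check_lib(p.name for p in Path(lib_dir).glob("*.jar"))
```

For example, `missing, conflicting = check_lib_dir("/path/to/proxy/lib")` (the path is a placeholder); both lists should come back empty before the Proxy is started.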

Setting up Seata

Start with Docker

docker run -d --name seata-server -p 8091:8091 seataio/seata-server:1.4.2

Open a shell in the container and follow its logs

$ docker exec -it seata-server sh

$ docker logs -f seata-server

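Deploy directly

wget https://github.com/seata/seata/archive/refs/tags/v1.4.2.zip
unzip v1.4.2.zip
sh ./bin/seata-server.sh -p 8091 -h 127.0.0.1 -m file

Note: v1.4.2.zip is the source archive of the tag. The bin/seata-server.sh launcher comes with the binary server package published on the Seata GitHub releases page, so either run it from that unpacked package or build the server from source first.

Reference: https://seata.io/zh-cn/docs/ops/deploy-server.html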
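Whichever way Seata is started, the Proxy's Seata client expects the TC at `127.0.0.1:8091` (the `default.grouplist` in `file.conf`). A quick TCP check before starting the Proxy is sketched below; `tc_reachable` is a hypothetical helper. A successful connect only shows that something is listening on the port, not that it is a healthy Seata TC.

```python
import socket


def tc_reachable(host: str = "127.0.0.1", port: int = 8091, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout.

    This only proves a listener exists; it does not speak the Seata protocol.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, unreachable, or timed out
        return False


print("Seata TC reachable:", tc_reachable())
```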

DB configuration

MySQL DDL

### Create this table in every ds of the Proxy

CREATE TABLE undo_log (
  id bigint(20) NOT NULL AUTO_INCREMENT,
  branch_id bigint(20) NOT NULL,
  xid varchar(100) NOT NULL,
  context varchar(128) NOT NULL,
  rollback_info longblob NOT NULL,
  log_status int(11) NOT NULL,
  log_created datetime NOT NULL,
  log_modified datetime NOT NULL,
  ext varchar(100) DEFAULT NULL,
  PRIMARY KEY (id),
  UNIQUE KEY ux_undo_log (xid, branch_id)
) AUTO_INCREMENT=1;
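Since `undo_log` has to exist in every data source, the targets can be read straight from the JDBC URLs in `config-sharding.yaml`. The sketch below parses those URLs (query strings shortened) and prints one `mysql` client command per data source; `undo_log_targets` and the file `undo_log.sql` (holding the CREATE TABLE statement above) are hypothetical.

```python
from urllib.parse import urlsplit

# Data source URLs copied from config-sharding.yaml (query strings shortened).
DATA_SOURCES = {
    "ds_0": "jdbc:mysql://127.0.0.1:3306/sbtest_sharding?useSSL=false",
    "ds_1": "jdbc:mysql://127.0.0.2:3306/sbtest_sharding?useSSL=false",
}


def undo_log_targets(data_sources: dict) -> dict:
    """Map each data source name to (host, port, database) parsed from its JDBC URL."""
    targets = {}
    for name, jdbc_url in data_sources.items():
        if not jdbc_url.startswith("jdbc:"):
            raise ValueError(f"{name}: not a JDBC URL: {jdbc_url}")
        parts = urlsplit(jdbc_url[len("jdbc:"):])  # parse the 'mysql://host:port/db?...' part
        targets[name] = (parts.hostname, parts.port or 3306, parts.path.lstrip("/"))
    return targets


for name, (host, port, database) in undo_log_targets(DATA_SOURCES).items():
    # undo_log.sql is a hypothetical file containing the CREATE TABLE statement above.
    print(f"# {name}\nmysql -h {host} -P {port} -u root -p {database} < undo_log.sql")
```

Note that 127.0.0.2 is also a loopback address on Linux, so if MySQL listens on all interfaces, ds_0 and ds_1 may resolve to the same schema, in which case the table only needs to be created once.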

PostgreSQL DDL

-- Create this table in every ds of the Proxy

CREATE TABLE "public"."undo_log" (
  "id" SERIAL NOT NULL primary key,
  "branch_id" int8 NOT NULL,
  "xid" varchar(100) COLLATE "default" NOT NULL,
  "context" varchar(128) COLLATE "default" NOT NULL,
  "rollback_info" bytea NOT NULL,
  "log_status" int4 NOT NULL,
  "log_created" timestamp(6) NOT NULL,
  "log_modified" timestamp(6) NOT NULL,
  "ext" varchar(100) COLLATE "default" DEFAULT NULL::character varying,
  CONSTRAINT "unq_idx_ul_branchId_xid" UNIQUE ("branch_id", "xid")
)
WITH (OIDS=FALSE);

ALTER TABLE "public"."undo_log" OWNER TO "postgres";

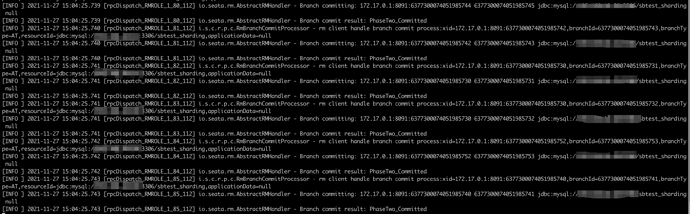

Benchmark procedure

### seata

./seata-server.sh -h 127.0.0.1 -p 8091 -m file > ../seata.log &

### proxy

./bin/start.sh

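### sysbench (first run the same command with "prepare" instead of "run" to create and load sbtest1..sbtest10 through the Proxy)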

sysbench oltp_read_write --mysql-host=127.0.0.1 --mysql-port=3307 --mysql-user=root --mysql-password=root --mysql-db=sbtest_sharding --tables=10 --table-size=1000000 --report-interval=10 --time=10 --threads=1 --max-requests=0 --percentile=99 --rand-type=uniform --range_selects=off --auto_inc=off run
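To compare runs (for example LOCAL vs BASE transactions), the headline numbers can be pulled out of the sysbench report. The sketch below assumes the default human-readable report layout of sysbench 1.0.x, where `transactions:` and `queries:` lines end in `(N per sec.)` and `--percentile=99` produces a `99th percentile:` latency line; `parse_sysbench` is a hypothetical helper, and any sample text fed to it is illustrative, not a measured result.

```python
import re


def parse_sysbench(report: str) -> dict:
    """Extract TPS, QPS and p99 latency (ms) from a sysbench 1.0.x text report."""
    patterns = {
        "tps": r"^\s*transactions:\s+\d+\s+\(([\d.]+) per sec\.\)",
        "qps": r"^\s*queries:\s+\d+\s+\(([\d.]+) per sec\.\)",  # skips "queries performed:"
        "p99_ms": r"^\s*99th percentile:\s+([\d.]+)",
    }
    result = {}
    for key, pattern in patterns.items():
        match = re.search(pattern, report, re.MULTILINE)
        if match:  # fields missing from the report are simply left out
            result[key] = float(match.group(1))
    return result
```

Typical use is to redirect the sysbench output to a file and call `parse_sysbench(open("run.log").read())`, where `run.log` is a placeholder name.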